Microsoft recently added two features to IoT Hub routing:

- Support for routing using the message body

- Support for Blob Storage as an endpoint

In this blog, we will look at both features using the Visual Studio 2017 extension called the IoT Hub Connected Service, which has also been updated.

But first, let’s look at the new Blob Storage endpoint.

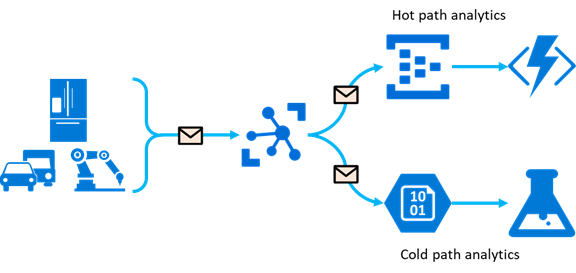

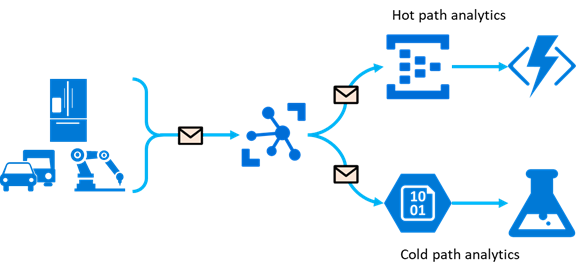

Sending telemetry to a Blob Storage container is a simple and efficient way to enable cold path analytics.

Until recently, there were a few ways to do this:

- Sending IoT Hub output to a Stream Analytics job, which writes it to a blob

- Sending IoT Hub output to an Azure Function, which writes it to a blob

- Making use of the IoT Hub ability to receive blobs

The first two require extra Azure resources, so additional costs are involved. The third is only used in very specific circumstances.

The new Blob Storage endpoint is a simple but very powerful way of making cold path analytics available, right from the IoT Hub.
