Microsoft Fabric is an all-in-one analytics solution for enterprises that covers everything from data movement to data science, Real-Time Analytics, and business intelligence.

For IoT developers, Fabric is a great addition to our Azure IoT toolkit because it also supports ingesting streams of IoT data.

Earlier posts in this series already covered integrating Fabric with Azure IoT Hub.

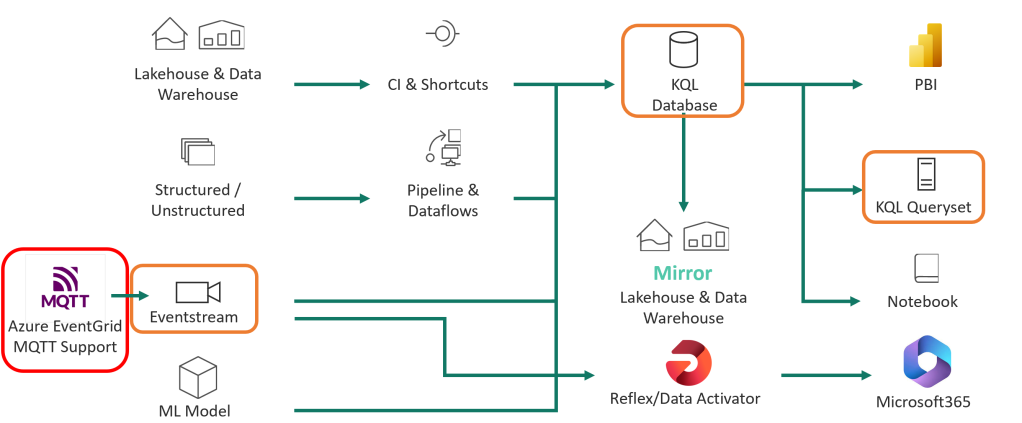

For my recent presentation at the Data Toboggan Winter edition, I wanted to integrate over the MQTT protocol instead, using the new MQTT broker support in Azure Event Grid.

In this blog post, we learn how to build that integration with a little extra help from an Azure Function.

Continue reading “Microsoft Fabric real-time analytics exploration: EventGrid MQTT Broker integration”